29 October 2024, by Milena Fluck and Daniel van der Wal

Creativity: Cognition and GenAI - Part 3

‘I never made one of my discoveries through the process of rational thinking.’

Albert Einstein

Whether through reinforcement learning or supervised learning, we have been trying for years to get virtual agents to learn, act rationally and make optimal decisions, and to do all this as efficiently and accurately as possible. Creativity goes in exactly the opposite direction. Detached from the known, the obvious and the rational, we want to create and implement things that are new and useful. Generative means having the power or function to create, produce, or reproduce something. This ‘something’ can be original, new, and useful. Only then are our defined requirements for creativity fulfilled. To be able to assess the extent to which a generative artificial agent has creative potential, behaves creatively, and produces creative products, we need to take a closer look at how it works.

The generative adversarial network (GAN)

A GAN is an architecture in the field of deep learning. With the help of a GAN, for example, new images can be generated from an existing image database or music from a database of songs.

A GAN consists of two parts: the discriminator and the generator. In the figure above, ‘Real data’ stands for real examples, such as pictures of dogs, which we pass to the discriminator. Based on what it has learned so far, the discriminator decides whether a picture is real or not. Depending on whether the judgement was right or wrong, its model is adjusted, so that it is more likely to judge the next picture correctly. On the other side, we have the generator. In a standard GAN, it receives random noise rather than a real image and turns that noise into a new image, for example a dog wearing a hat. This image is also passed to the discriminator for evaluation. If the generated image does not pass the authenticity check, the generator adjusts its model to imitate reality better the next time. The aim is for the generator to create something new that is so close to reality that the discriminator considers it to be real. The generator's goal is not to create something new or useful. Without specific instructions, it has no way of knowing what is useful to us.
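The tug-of-war between the two parts is usually written as a minimax objective, as in the original GAN formulation by Goodfellow et al. (2014). The symbols are not taken from this article: $D$ is the discriminator, $G$ the generator, $x$ a real example and $z$ a random noise input.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by scoring real data close to 1 and generated data close to 0. The generator tries to make $V$ small by producing images the discriminator scores as real. Nothing in this objective rewards novelty or usefulness, only closeness to the real data.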

Could a GAN create lizards and horses with wings in rainbow colours just because it thinks, ‘That hasn't been done before and everyone will think it's really cool’? If so, then only by coincidence. Every generated image remains a response to a task and tries to stay as close as possible to the task and its given context. Caution: subjective perception! To get results that are further removed from reality, it helps to provide clues about a different, non-real world, for example ‘It is a steampunk world’ or ‘It is a fantasy world’.

The cognitive processes underlying creativity or divergent and convergent thinking in humans have not yet been fully researched. Creative thinking is an iterative process of spontaneous generation and controlled elaboration. It is closely related to the learning process.

Important aspects are:

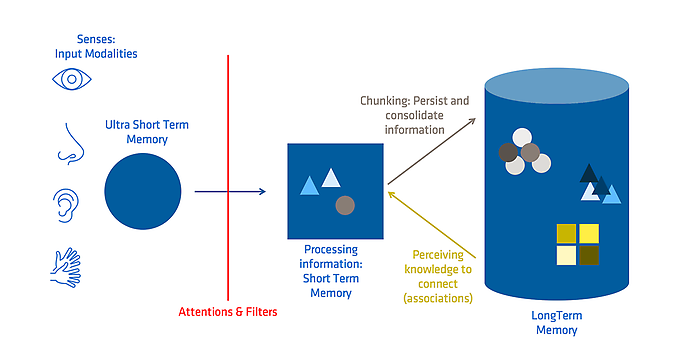

- Attention: The ability to filter, absorb and process the information that flows towards us. Visual information remains in the ultra-short-term memory (also called sensory memory) for up to 0.5 seconds and auditory information for up to two seconds, until it is decided whether it will be processed further at all.

- Short-term or working memory: Four to a maximum of nine ‘information morsels’ (chunks) can be stored and processed simultaneously.

- Long-term memory: Here we can store information for a second or an entire life as a schema.

When we come across new relevant information and decide to process it further, this is always associated with a retrieval of knowledge that is already stored in our long-term memory. Every instance of information processing leads to changes in existing schemata.

But which information from our long-term memory do we need to link and store the newly acquired information in a meaningful way later on (chunking)? Each of us has our own schemata and sees different connections. The assumption that ideas are linked (learned) in the form of simple cognitive elements is called association.

‘If you think about things the way someone else does, then you will never understand it as well as if you think about it your own way.’

Manjul Bhargava (Fields Medal Mathematics, 2014)

Associative thinking is a mental process in which an idea, thought or concept is connected or associated with another idea or concept, often seemingly unrelated. Depending on culture and experience, people have more or less similar thought patterns, and we often make similar associations. For example, in one study, people were asked to draw a species of animal with feathers. Most subjects tended to give the creature wings and a beak as well. We tend towards ‘predictable’ creativity, in which we do not stray far from reality. Searching outside of a contextual solution space and creating something useful from it is cognitively very demanding.

With the help of certain techniques and ways of thinking, we can succeed in breaking through existing contexts and ideas by imagining or thinking about two contradictory concepts at the same time. Some creativity techniques will be presented in the next part.

Janusian thinking is the ability to create something new and valuable from two contradictory ideas. Albert Einstein, for example, imagined that a person falling from a roof is simultaneously at (relative) rest and in motion, a finding explained by his general theory of relativity. Generative artificial agents also have an impressive associative ability, thanks to, among other things, huge knowledge bases and semantic networks. Let's take a closer look at this ability.

RNNs, LSTMs and Transformers

Recurrent neural networks (RNNs) are used, for example, to translate large amounts of text into another language. The text is processed sentence by sentence and word by word:

- The horse doesn't eat cucumber salad.

- The animal prefers carrots.

- But don't feed it chocolate.

In the second sentence, we no longer find the horse. Fortunately, we know from our knowledge base that a horse is an animal. And who is ‘it’ in the third sentence? To be able to answer these questions later, a context must be preserved and passed on from one processing step to the next (a hidden state).
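How a hidden state carries context from word to word can be sketched in a few lines. This is a minimal illustration, not a trained model: the two-dimensional word vectors and the weights are hand-picked assumptions, and all names (`EMBEDDINGS`, `rnn_step`, `encode`) are hypothetical.

```python
import math

# Hypothetical 2-dimensional word vectors, chosen by hand for illustration only.
EMBEDDINGS = {
    "the": [0.1, 0.0],
    "horse": [0.9, 0.2],
    "it": [0.0, 0.1],
}

# Hand-picked weights; a real network would learn these during training.
W_HIDDEN = [[0.5, 0.1], [0.0, 0.5]]
W_INPUT = [[1.0, 0.0], [0.0, 1.0]]


def matvec(matrix, vector):
    """Multiply a small matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_step(hidden, word):
    """Mix the previous hidden state with the current word."""
    x = EMBEDDINGS.get(word, [0.0, 0.0])
    mixed = [a + b for a, b in zip(matvec(W_HIDDEN, hidden), matvec(W_INPUT, x))]
    return [math.tanh(m) for m in mixed]


def encode(words):
    """Process words one by one, passing the hidden state along."""
    hidden = [0.0, 0.0]
    for word in words:
        hidden = rnn_step(hidden, word)
    return hidden


with_horse = encode(["the", "horse", "it"])
without_horse = encode(["the", "it"])
print(with_horse, without_horse)
```

When the network reaches ‘it’, the hidden state still contains a trace of ‘horse’ in the first sequence but not in the second. The hidden state always has the same size, no matter how many words have been processed.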

Our text body is very small. If we want to translate an entire book, the hidden state has to carry more and more context with each step, and what it still retains becomes unpredictable over time. Similar to how humans hold information as context for the next process for different lengths of time depending on its relevance, long short-term memory (LSTM) was introduced as a kind of RNN. It separates information that is only passed on briefly to the next step (hidden state) from globally relevant information that is kept for longer (cell state). But even this is not optimal and can fail, especially with long texts. Processing text sequentially while maintaining the context has disadvantages. Even if we manage to use an LSTM to keep only what we consider important, the amount of context continues to grow and potentially necessary information is lost.
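The split between a short-lived hidden state and a longer-lived cell state can be sketched with a scalar version of the standard LSTM update. This is a simplified illustration under stated assumptions: real LSTMs use vectors and learned weight matrices, and the parameter names (`w_f`, `u_f`, `b_f` and so on) are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, hidden, cell, p):
    """One step of a scalar LSTM cell with parameters p."""
    forget = sigmoid(p["w_f"] * x + p["u_f"] * hidden + p["b_f"])  # how much old cell state to keep
    write = sigmoid(p["w_i"] * x + p["u_i"] * hidden + p["b_i"])   # how much new information to store
    expose = sigmoid(p["w_o"] * x + p["u_o"] * hidden + p["b_o"])  # how much of the cell to pass on
    candidate = math.tanh(p["w_c"] * x + p["u_c"] * hidden + p["b_c"])
    new_cell = forget * cell + write * candidate   # long-lived memory
    new_hidden = expose * math.tanh(new_cell)      # short-lived context for the next step
    return new_hidden, new_cell


def run(params, steps=50, initial_cell=1.0):
    """Feed empty input for a number of steps and return the remaining cell state."""
    hidden, cell = 0.0, initial_cell
    for _ in range(steps):
        hidden, cell = lstm_step(0.0, hidden, cell, params)
    return cell


# All weights zero, so only the gate biases decide what happens (illustrative values).
ZERO = {f"{kind}_{gate}": 0.0 for kind in "wub" for gate in "fioc"}
remembering = dict(ZERO, b_f=10.0, b_i=-10.0)  # forget gate almost fully open
forgetting = dict(ZERO, b_f=0.0, b_i=-10.0)    # forget gate half open

kept = run(remembering)
lost = run(forgetting)
print(kept, lost)
```

With the forget gate almost fully open, the cell state survives 50 steps nearly unchanged. With the gate half open, it has effectively vanished. In a trained network the gates are set by the data, which is why relevant information can still be dropped in long texts.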

As a solution to this problem, researchers at Google published a paper in 2017 entitled ‘Attention Is All You Need’.

Attention is the missing element in the process. We humans rarely read a text word for word. We keep an eye on the context and focus our attention on what is relevant at the moment. Not every word is equally important, and some are merely ‘skimmed’. We also look at information from different angles. While humans can only process a limited number of chunks at once because of the limits of working memory, the transformer is much faster thanks to its multi-perspective attention (multi-head attention in the paper's terms). This enables the transformer to encode multiple relationships and nuances for each word. Incidentally, according to Ashish Vaswani of Google Brain, transformers were given that name because ‘Attention Net’ would have been too boring. So a generative artificial agent tries to recognise the most important information on the one hand and to extract as much contextual information as possible on the other, in order to give us the most realistic result possible through the corresponding associations.
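The core of this mechanism, scaled dot-product attention, fits into a short sketch. It shows a single attention ‘perspective’ (one head) with hand-picked two-dimensional vectors. The vectors and the names `softmax` and `attention` are assumptions for illustration; in a real transformer, queries, keys and values are learned projections of the word embeddings.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


words = ["horse", "cucumber", "chocolate"]
# Hand-picked vectors: first axis roughly 'animal', second roughly 'food'.
keys = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9]]
values = keys
query_for_it = [2.0, 0.0]  # 'it' is looking for something animal-like

weights, output = attention(query_for_it, keys, values)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

The query for ‘it’ puts most of its weight on ‘horse’ and only a little on the other words, without having to pass a hidden state through every word in between. Multi-head attention runs several such computations side by side, each with its own projections.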

Now that we have a superficial understanding of how it works, let us return to the human process. We know from it that new and potentially useful associations, in other words ideas, arise from retrieving existing information and linking new and existing blocks of information or schemata. To create original links, information must not only be retrieved, but also first found and put into an obvious context.

The words ‘The horse does not eat cucumber salad’ were the first words spoken through a telephone. Philipp Reis was a teacher in Hessen and built a model of the ear for his students. He succeeded, and the apparatus was also able to reproduce sounds of all kinds at any distance using galvanic current. To prove that the spoken sentences were actually arriving at the other end and were not memorised, he chose to transmit whimsical, spontaneously spoken sentences. But the sentence is not that strange.

Mr Reis's aim was to choose a word that is semantically far removed from reality. It should be original. In principle, a horse eats vegetables. You would have to try it, but it cannot be ruled out that a horse might exceptionally reach for a cucumber salad when it is moderately to severely hungry. When a generative artificial agent generates text, it proceeds in a similar way. It travels through semantic space until it finds something suitable and completes the sentence. To arrive at original and possibly useful answers, we have to travel further through semantic space. This increases the number of alternatives whose usefulness must be evaluated.

The goal of a generative artificial agent is not to give us the most original answer possible, but to stay close to reality and to answer ‘realistically’. In Reis's case, too, we have not travelled far in the semantic space.

When we asked ChatGPT-3 to complete the sentence ‘Horses do not eat...’, we received ‘cucumber salad’ as an answer, together with a biography of Philipp Reis. When we asked for alternatives, ChatGPT-3 returned ‘oats’, ‘corn’ and ‘wheat’. ChatGPT-3 ignored the negation in the sentence and gave us the most obvious alternatives. The answer was a little more appropriate for our English attempt ‘The horse eats no...’, where ChatGPT-3 gave us ‘hay that has not been thoroughly inspected for mould and contaminants’ as an answer. A very rational answer. Not original, but useful. When we asked ChatGPT-3 for more ‘creative’ answers, we got ‘stardust’, ‘moonlight’ and ‘rainbow’. This showed that ChatGPT-3 interpreted the request to be ‘creative’ as a request to move further into the semantic space. ChatGPT-3 confirmed this when we asked, ‘So when I ask you to give me a creative answer you travel further within your semantic network?’:

ChatGPT-3: ‘Yes, when you ask for a creative answer, I effectively “travel further” within my semantic network. This means I explore a wider range of nodes (concepts) and edges (relationships) to draw from more diverse and sometimes less directly related areas of knowledge.’
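The picture of ‘travelling further’ through nodes and edges can be sketched with a breadth-first search over a tiny graph. This is only a metaphor in code: the network below is a hypothetical, hand-built toy and says nothing about how ChatGPT actually stores or retrieves concepts.

```python
from collections import deque

# Hypothetical toy semantic network; each edge is an association in both directions.
SEMANTIC_NETWORK = {
    "horse": ["hay", "oats", "stable", "unicorn"],
    "hay": ["horse", "mould"],
    "oats": ["horse"],
    "stable": ["horse"],
    "unicorn": ["horse", "rainbow"],
    "rainbow": ["unicorn", "stardust"],
    "stardust": ["rainbow"],
    "mould": ["hay"],
}


def concepts_within(start, max_hops):
    """Return every concept reachable from start in at most max_hops steps."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == max_hops:
            continue  # do not travel any further from here
        for neighbour in SEMANTIC_NETWORK.get(node, []):
            if neighbour not in distances:
                distances[neighbour] = distances[node] + 1
                queue.append(neighbour)
    del distances[start]
    return distances


obvious = concepts_within("horse", 1)
creative = concepts_within("horse", 3)
print(sorted(obvious))
print(sorted(creative))
```

One hop from ‘horse’ yields only the obvious neighbours such as ‘oats’ and ‘hay’. Three hops also reach ‘rainbow’ and ‘stardust’, but the number of candidates whose usefulness has to be judged grows with every hop.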

People we perceive as more creative often have more flexible semantic networks. There are many connections (edges) and short paths between the individual concepts (nodes). You can find out how good you are at associative thinking with the Remote Associates Test. You are given three terms (fish, rush, mine) and have to find the term that connects all three (solution: gold => goldfish, goldrush, goldmine). A free page with examples to try out is available here. We also tested ChatGPT-3 and ran into limits. To try it out yourself, we recommend the following prompt: ‘With which word can we combine these three words in a meaningful way? palm, shoe, house’.
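For a machine with a word list, the gold example reduces to a set intersection. The sketch below assumes a hypothetical mini-lexicon of compounds written as single words. A human solving the test has no such list at hand and has to find the connecting word by association.

```python
# Hypothetical mini-lexicon of compound words, for illustration only.
COMPOUNDS = {
    "goldfish", "goldrush", "goldmine",
    "catfish", "swordfish", "coalmine", "landmine",
}


def solve_remote_associate(cues, compounds):
    """Find the words that form a known compound with every cue."""
    candidate_sets = []
    for cue in cues:
        candidates = set()
        for compound in compounds:
            if len(compound) <= len(cue):
                continue
            if compound.endswith(cue):
                candidates.add(compound[: -len(cue)])   # e.g. 'gold' + 'fish'
            if compound.startswith(cue):
                candidates.add(compound[len(cue):])     # e.g. 'fish' + something
        candidate_sets.append(candidates)
    return sorted(set.intersection(*candidate_sets))


solution = solve_remote_associate(["fish", "rush", "mine"], COMPOUNDS)
print(solution)
```

‘fish’ alone matches several partners (gold, cat, sword), but only ‘gold’ also combines with ‘rush’ and ‘mine’. The hard part of the test is not the intersection but having the right associations available in the first place.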

Associations are relevant for divergent thinking and creativity. However, association is not the only way to generate creative ideas. Other cognitive processes include:

- Generalisation: Extracting common characteristics from specific examples to form a general rule or concept (e.g. all birds have feathers).

- Analogy: Mapping the relationships between elements from two different domains to highlight similarities (such as the brain is to a person what the CPU is to a computer).

- Abstraction: Leaving out specific details to concentrate on the core principles of a concept.

An example of abstract thinking: chameleons, octopuses and arctic foxes camouflage themselves with the help of the colour of their skin or fur to avoid being seen by their enemies or prey. We can now extract this concept as abstract knowledge and apply it. Today, for example, we find it in military camouflage, where it also serves to avoid being detected by the enemy or prey.

Generalisation, abstraction and analogy are currently being intensively researched. The generative AI market is in the process of testing potential solutions, such as structure-mapping engines (SME). In the field of analogies, there have already been some breakthroughs, known as ‘domain transfer’. However, it seems that we are still a long way from technologies with human-like abilities in abstract thinking, even if ChatGPT-3 is convinced of its own abilities. For those who want to learn more about this topic, we recommend this scientific paper by the Santa Fe Institute as a starting point.
