16 April 2024, by Stephan Jager and Thorben Forke

Data chaos at the touch of a button

Challenges and risks when using Microsoft Copilot for Microsoft 365

The marvellous world of AI seems more limitless than ever. The topic is omnipresent, not least because of Microsoft's heavy investment in OpenAI and the subsequent release, in November 2023, of Copilot for Microsoft 365: an AI assistant built into the Office applications people use every day. Its potential to increase productivity and optimise processes is apparent from the very first use. For most companies, however, the road there is rocky and raises the same questions that have to be answered whenever a new Microsoft service is introduced. Simply "switching on" Copilot for Microsoft 365 risks drowning the organisation in data chaos. In this blog post, we outline the most important governance aspects of introducing Microsoft 365 Copilot and other AI services based on large language models.

When introducing generative AI, the first step is usually to look at potential areas of application for the technology. Why should we as a company use AI? In which business areas and applications? What might use cases look like? And to what extent can existing use cases be transferred to our company? Many companies are already past this stage and know where AI services such as Copilot for Microsoft 365 should be used in the future. The next question is how AI services can be implemented and introduced securely in the company. We distinguish between two dimensions:

- 1. Enablement, i.e. empowering employees to use AI services such as Copilot in the Microsoft 365 apps (Outlook, Teams, Word, Excel, PowerPoint, etc.) through training, prompt training and accompanying change management measures.

- 2. Governance, i.e. the development and implementation of rules and framework conditions for the secure and compliant use of AI services in your own organisation.

In the following, we present the various governance aspects and the respective risks and challenges associated with non-compliance.

Challenges and risks when introducing M365 Copilot

Deploying Microsoft 365 Copilot is quick and easy: once an administrator has assigned the licence, users can get started. However, disillusionment often sets in quickly, and the following problems occur: employees are dissatisfied with irrelevant results that contain outdated information, are inaccurate or miss the point entirely. The hoped-for relief from the AI assistant therefore fails to materialise.

"If you digitise a shit process, you have a shit digital process."

Thorsten Dirks (former CEO of Telefonica Germany)

Even if Thorsten Dirks's famous quote does not apply one hundred per cent here, its core idea does apply to AI assistants such as Microsoft 365 Copilot: if the AI is fed with old, irrelevant or redundant data, it returns old, irrelevant or redundant answers. This problem is often referred to as the "shit-in, shit-out principle".

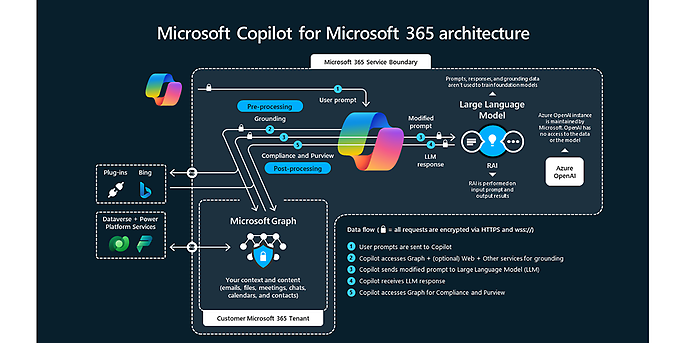

A further challenge arises when employees receive information from the AI assistant that they are not authorised to receive: the system surfaces information the user did not know about and, in the worst case, should not know. This problem, often referred to as "oversharing", stems from a lack of control over existing permissions. It has often existed in the company for some time; AI assistants simply make it more pronounced. During grounding, the step in which Microsoft 365 Copilot enriches the prompt with organisational data before generating a response, Copilot can draw on all files and information that the executing user account has access to. Whether the user is aware of these permissions is irrelevant. Nor does the user have to search for keywords that appear in a file's name, title or content: with the help of the Semantic Index, the AI independently establishes relationships between pieces of information (e.g. synonyms) and returns them in context. This makes oversharing far more visible, and far more explosive, than in enterprise search projects, for example.

Microsoft's illustration of data processing, including the grounding process. Source: https://learn.microsoft.com/de-de/microsoft-365-copilot/media/copilot-architecture.png
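To make the oversharing mechanism concrete, here is a deliberately simplified Python toy model. It is not how Copilot is implemented; it only illustrates the principle described above: the set of content available to a user is determined by the grants on that content (directly or via group membership), not by whether the user knows those grants exist. The `Document` class, the users and the documents are hypothetical; the group "Everyone except external users" stands in for a broadly scoped group that was granted access by mistake.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    # Users or groups that were granted access (hypothetical model)
    granted_to: set = field(default_factory=set)


def effective_principals(user: str, memberships: dict) -> set:
    """Return the user plus every group they belong to (no nested groups)."""
    return {user} | memberships.get(user, set())


def accessible_documents(user: str, memberships: dict, documents: list) -> list:
    """Everything a permission-trimmed lookup could return for this user.

    Only permissions matter here: the user does not need to know the
    document exists or search for it by name.
    """
    principals = effective_principals(user, memberships)
    return [doc.title for doc in documents if doc.granted_to & principals]


if __name__ == "__main__":
    memberships = {"alice": {"Sales", "Everyone except external users"}}
    documents = [
        Document("Sales pitch 2024", {"Sales"}),
        # Misconfigured: an HR file shared with a company-wide group
        Document("Salary list 2024", {"Everyone except external users"}),
        Document("Board minutes", {"Board"}),
    ]
    # prints ['Sales pitch 2024', 'Salary list 2024']
    print(accessible_documents("alice", memberships, documents))
```

In this model the fix lies in the permissions, not in the AI: removing the overly broad grant on the salary list removes it from Alice's result set.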

Expectations of the AI assistant are high, and initial curiosity often gives way to disillusionment when good results fail to materialise for the reasons mentioned above. During the introduction, it is therefore just as important to plan licensing sensibly and to define clear user groups and suitable use cases, so that the added value of the deployment is proportionate to the effort involved. Another phenomenon we see in many customer projects is inadequate offboarding, which leaves deactivated user accounts still holding licences.

The interim conclusion can be summarised as follows: The use of Copilot for Microsoft 365 brings with it some (typical) challenges which, in the worst case, can also have data protection consequences in the event of oversharing.

Organisational measures for optimised data storage

As already described, "simply switching on" Microsoft 365 Copilot can quickly lead to disillusionment. To avoid this, the following tips and tricks can significantly improve the way data is stored:

The first measure against the shit-in, shit-out problem is to clean up old, redundant and irrelevant information. Analysis tools and scripts can quickly identify information that has not been opened for a long time so that it can be cleaned up. Much like a spring clean at home, this raises the quality of the stored data and therefore the relevance of the AI's results. It should also be clear which version of a document is actually current. In projects, it is not uncommon to find documents labelled V0.1 through V1.8, for example. Most of these intermediate versions are never opened again because they are no longer relevant, so it is advisable to delete them. If older states of a file are still needed, the version history in SharePoint Online can keep them within a single document instead of as separate files.
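A minimal Python sketch of such a clean-up script, assuming the data sits on a file share that can be walked from a local path. Because many file systems do not update last-access timestamps reliably, it uses the last-modified time as a proxy for "not opened". The version-suffix pattern (`_V1.8`) and the share path are hypothetical; for SharePoint Online, the same logic would have to be fed from an inventory export rather than `os.walk`. The script only reports candidates and deletes nothing.

```python
import os
import re
import time
from typing import Iterable, List, Tuple

# Hypothetical pattern for intermediate versions, e.g. "Concept_V1.8.docx"
VERSION_PATTERN = re.compile(
    r"^(?P<base>.+?)[ _-]?[Vv](?P<major>\d+)\.(?P<minor>\d+)(?P<ext>\.\w+)$"
)
SECONDS_PER_DAY = 86_400


def collect_mtimes(root: str) -> List[Tuple[str, float]]:
    """Walk a directory tree and return (path, last-modified timestamp) pairs."""
    entries = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            entries.append((path, os.path.getmtime(path)))
    return entries


def stale_files(entries: Iterable[Tuple[str, float]], max_age_days: int, now: float) -> List[str]:
    """Paths that have not been modified within the last `max_age_days` days."""
    cutoff = now - max_age_days * SECONDS_PER_DAY
    return sorted(path for path, mtime in entries if mtime < cutoff)


def superseded_versions(paths: Iterable[str]) -> List[str]:
    """Paths of intermediate versions replaced by a higher version in the same folder."""
    latest = {}  # (folder, base, ext) -> ((major, minor), path)
    superseded = []
    for path in paths:
        folder, name = os.path.split(path)
        match = VERSION_PATTERN.match(name)
        if not match:
            continue  # not a versioned file name
        key = (folder, match["base"].lower(), match["ext"].lower())
        version = (int(match["major"]), int(match["minor"]))
        if key not in latest:
            latest[key] = (version, path)
        elif version > latest[key][0]:
            superseded.append(latest[key][1])  # previous "latest" is now outdated
            latest[key] = (version, path)
        else:
            superseded.append(path)
    return sorted(superseded)


if __name__ == "__main__":
    share = "/mnt/fileshare/projects"  # hypothetical path
    entries = collect_mtimes(share)
    for path in stale_files(entries, max_age_days=730, now=time.time()):
        print("stale:", path)
    for path in superseded_versions(path for path, _ in entries):
        print("superseded:", path)
```

Versions are compared as integer pairs, so V0.10 correctly counts as newer than V0.9, and files are only compared within the same folder.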

A very simple and also very effective measure is to organise the data in logical directories and sites. Assuming that a company uses SharePoint Online and Microsoft Teams as its primary data repository, it is advisable to set up separate data rooms for the individual departments, projects and application areas. Ideally, HR data should be stored in a separate HR library and IT documentation in a document library of the IT organisation. This helps with authorisation management and also makes it easier for AI to establish the relevance of content to a specific topic.

Tagging files and using metadata in SharePoint help further. They not only let users find their way around the data more quickly (e.g. via views, groupings and filters) but also help Microsoft 365 Copilot better understand the context of the information.

As a final tip, we recommend a standardised naming strategy for documents, so that relevant information can be found more quickly by users and used more effectively by the AI.
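A naming convention is easiest to enforce when it can be checked automatically. The following Python sketch validates file names against a purely hypothetical convention, `<YYYY-MM-DD>_<DEPT>_<Topic>.<ext>`, with an assumed set of department codes; both would need to be replaced by whatever your organisation agrees on.

```python
import re
from datetime import date
from typing import List

# Hypothetical convention: <YYYY-MM-DD>_<DEPT>_<Topic>.<ext>
ALLOWED_DEPARTMENTS = {"HR", "IT", "FIN", "SALES"}  # assumed department codes
NAME_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2})_(?P<dept>[A-Z]+)_(?P<topic>[A-Za-z0-9-]+)\.(?P<ext>[a-z0-9]+)$"
)


def naming_violations(filename: str) -> List[str]:
    """Return a list of problems; an empty list means the name is compliant."""
    match = NAME_PATTERN.match(filename)
    if not match:
        return ["does not match <YYYY-MM-DD>_<DEPT>_<Topic>.<ext>"]
    problems = []
    try:
        date.fromisoformat(match["date"])  # rejects impossible dates such as month 13
    except ValueError:
        problems.append(f"invalid date {match['date']}")
    if match["dept"] not in ALLOWED_DEPARTMENTS:
        problems.append(f"unknown department {match['dept']}")
    return problems


if __name__ == "__main__":
    for name in ["2024-04-16_HR_Onboarding-Checklist.docx", "Final final v2.docx"]:
        print(name, naming_violations(name) or "OK")
```

Returning a list of problems rather than a single yes/no makes it easy to report all violations for a file at once, for example in a periodic report to site owners.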

Of course, this is much easier said than done. In quite a few companies, chaotic conditions prevail in Microsoft Teams, SharePoint Online or on file shares. We therefore strongly recommend accompanying these measures with holistic change management. This is the only way to establish a shared understanding of the rules of the game for working with Microsoft 365 Copilot.

Technical measures for mapping data governance

In addition to the organisational measures that should be implemented, there are also a number of technical measures that need to be considered. Here are our top five possible measures at a glance:

- 1. Implementation of retention and deletion policies using Microsoft Purview Data Lifecycle Management:

    - 1. How long must data be retained? Legal requirements must be observed here.

    - 2. When should data be deleted automatically to prevent chaos from building up again?

- 2. Establishment of a document classification strategy, for example based on Microsoft Purview Information Protection:

    - 1. Who is authorised to access which information and data?

    - 2. Please note that Microsoft Copilot for Microsoft 365 can only process protected (encrypted) content if the user holds the corresponding usage rights.

- 3. Continuous review of existing permissions (access reviews):

    - 1. review of access to Teams, SharePoint Online sites and file shares (recertification),

    - 2. removal of permissions that are no longer required and

    - 3. identification of breaks in permission inheritance, for example caused by individual sharing.

- 4. Evaluation and clean-up of sharing in SharePoint Online and OneDrive for Business:

    - 1. organisation-wide sharing links,

    - 2. anonymous sharing links and

    - 3. sharing links generated via Teams chats.

- 5. Optimisation of licence usage:

    - 1. monitoring the use of Microsoft 365 Copilot,

    - 2. deactivating the licences of inactive users and

    - 3. reducing storage costs (fewer storage licences required) through tidy data rooms.
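Items 4 and 5 lend themselves to simple reporting scripts. The Python sketch below works on data you are assumed to have exported beforehand: sharing permissions shaped like Microsoft Graph `permission` resources (whose `link.scope` can be, among others, `anonymous` or `organization`), and a user list with `accountEnabled` and `userPrincipalName` plus two hypothetical fields for the Copilot licence and the last Copilot activity. How the export is produced is out of scope here, and the 90-day threshold is an arbitrary example.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

RISKY_SCOPES = {"anonymous", "organization"}


def risky_links(permissions_by_item: Dict[str, List[dict]]) -> List[Tuple[str, str, str]]:
    """Return (item, scope, type) for every anonymous or organisation-wide link."""
    findings = []
    for item, permissions in permissions_by_item.items():
        for permission in permissions:
            link = permission.get("link")  # only sharing-link permissions carry this
            if link and link.get("scope") in RISKY_SCOPES:
                findings.append((item, link["scope"], link.get("type", "unknown")))
    return sorted(findings)


def licences_to_reclaim(users: List[dict], today: date, max_inactive_days: int = 90) -> List[str]:
    """Licensed users who are disabled or have not used Copilot recently."""
    cutoff = today - timedelta(days=max_inactive_days)
    reclaim = []
    for user in users:
        if not user["hasCopilotLicence"]:  # hypothetical field
            continue
        last_activity = user.get("lastCopilotActivity")  # hypothetical; None = never used
        if not user["accountEnabled"] or last_activity is None or last_activity < cutoff:
            reclaim.append(user["userPrincipalName"])
    return sorted(reclaim)


if __name__ == "__main__":
    permissions = {
        "/sites/HR/Salaries.xlsx": [{"link": {"scope": "anonymous", "type": "view"}}],
        "/sites/IT/Runbook.docx": [{"link": {"scope": "users", "type": "edit"}}],
    }
    print(risky_links(permissions))
    users = [
        {"userPrincipalName": "bob@example.com", "accountEnabled": False,
         "hasCopilotLicence": True, "lastCopilotActivity": date(2024, 4, 1)},
    ]
    print(licences_to_reclaim(users, today=date(2024, 4, 16)))
```

Both functions only report; revoking links or removing licences should remain a deliberate, reviewed step.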

Is governance alone sufficient?

The answer is: NO. The recommendations above help companies, and the AI they use such as Microsoft 365 Copilot, to use data better and more securely. However, these measures are not enough to protect your data from unauthorised access. We would therefore like to emphasise that it is essential to develop a security strategy and implement security measures in line with the Zero Trust model. This topic is so extensive, however, that we cover it in separate material on our channels.

Summary

By taking the points above into account in Copilot governance, you sustainably reduce the risk of unwanted data chaos at the push of a button. Strong governance, and not only for Microsoft AI, creates trust and acceptance for using the service in production. True to the motto "order is half the battle", Copilot governance is the decisive prerequisite for using Microsoft AI services securely in your own organisation. It is important to establish clear guidelines and processes for the use, storage and classification of documents and data to ensure effective and secure use.

If appropriate measures and guidelines are not properly established and followed by employees, the risk of security incidents or compliance breaches increases significantly. AI makes these risks visible even more quickly and, because of its intelligent data processing, amplifies their impact.

Would you like to find out more about exciting topics from the world of adesso? Then take a look at our previous blog posts.
